Today, the Commission has adopted a new European strategy for a Better Internet for Kids (BIK+), to improve age-appropriate digital services and to ensure that every child is protected, empowered and respected online.

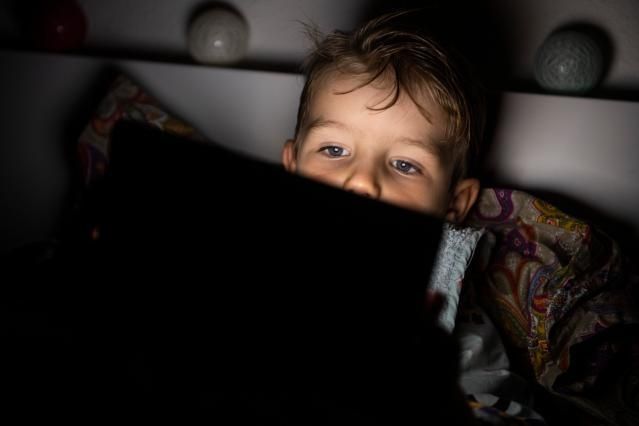

In the past ten years, digital technologies and the way children use them have changed dramatically. Most children use their smartphones daily, almost twice as much as ten years ago, and they start using them at a much younger age (see EU Kids Online 2020). Modern devices bring opportunities and benefits, allowing children to interact with others, learn online and be entertained. But these gains come with risks, such as cyberbullying (see JRC study) and exposure to disinformation or to harmful and illegal content, from which children need to be protected.

The new European strategy for a Better Internet for Kids aims for accessible, age-appropriate and informative online content and services that are in children’s best interests.

In addition, a new independent EU Centre on Child Sexual Abuse (EU Centre) will support service providers by acting as a hub of expertise, providing reliable information on identified material, receiving and analysing reports from providers to filter out erroneous ones before they reach law enforcement, swiftly forwarding relevant reports for law enforcement action, and supporting victims.

The new rules on child sexual abuse online will help rescue children from further abuse, prevent material from reappearing online, and bring offenders to justice. These rules will include:

- Mandatory risk assessment and risk mitigation measures: Providers of hosting or interpersonal communication services will have to assess the risk that their services are misused to disseminate child sexual abuse material or for the solicitation of children, known as grooming. Providers will also have to propose risk mitigation measures.

- Targeted detection obligations, based on a detection order: Member States will need to designate national authorities in charge of reviewing the risk assessment. Where such authorities determine that a significant risk remains, they can ask a court or an independent national authority to issue a detection order for known or new child sexual abuse material or grooming. Detection orders are limited in time, targeting a specific type of content on a specific service.

- Strong safeguards on detection: Companies that have received a detection order will only be able to detect content using indicators of child sexual abuse verified and provided by the EU Centre. Detection technologies may be used only for the purpose of detecting child sexual abuse. Providers will have to deploy technologies that are the least privacy-intrusive in accordance with the state of the art in the industry, and that limit the rate of false positives to the maximum extent possible.

- Clear reporting obligations: Providers that have detected online child sexual abuse will have to report it to the EU Centre.

- Effective removal: National authorities can issue removal orders if the child sexual abuse material is not swiftly taken down. Internet access providers will also be required to disable access to images and videos that cannot be taken down, e.g., because they are hosted outside the EU in non-cooperative jurisdictions.

- Reducing exposure to grooming: The rules will require app stores to ensure that children cannot download apps that may expose them to a high risk of solicitation.

- Solid oversight mechanisms and judicial redress: Detection orders will be issued by courts or independent national authorities. To minimise the risk of erroneous detection and reporting, the EU Centre will verify reports of potential online child sexual abuse made by providers before sharing them with law enforcement authorities and Europol. Both providers and users will have the right to challenge any measure affecting them in court.
